Toxic comments, offensive memes, and spam are a daily battle for anyone managing an online community. As user-generated content explodes across platforms, content moderation is no longer optional. It’s mission-critical.

In this guide, we’ll break down smart content moderation strategies, essential tools, and workflows to help you protect your brand, your users, and your peace of mind.

Whether you’re running a Facebook group, mobile app, or brand page, this is your go-to playbook for 2025 and beyond.

Understanding Content Moderation

Content moderation involves reviewing and managing what people post online, including comments, images, and videos, to ensure it adheres to the platform’s rules. This is especially important for websites and apps that allow user-generated content.

Effective content moderation fosters safe online communities where users feel welcome and respected. It also protects the platform’s image (brand safety), keeps users satisfied (user experience), and helps the platform comply with the law (legal compliance).

Using the right tools, such as social media content filtering tools, and setting clear community moderation guidelines are key.

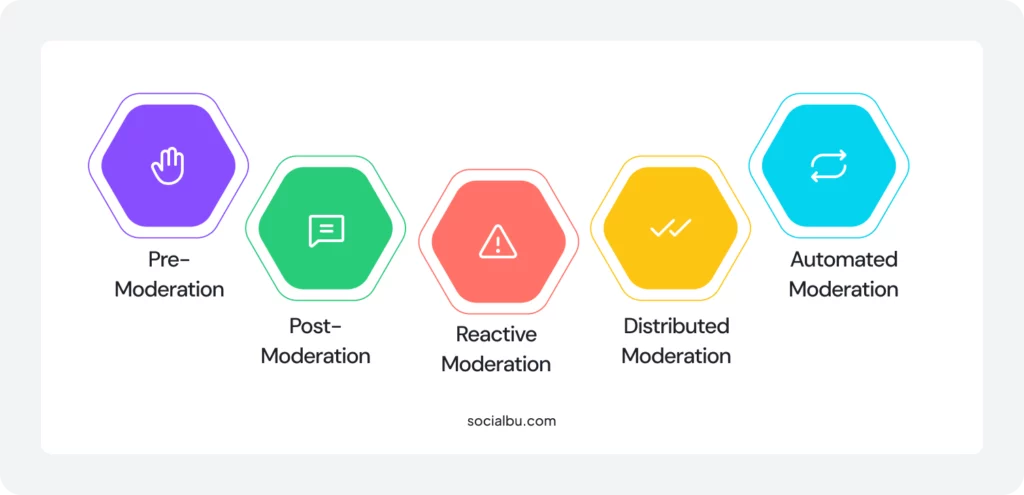

Types of Content Moderation

To maintain safe online communities, platforms employ various types of content moderation. Each type has its own method, depending on how and when content is reviewed.

Here are the main types:

1. Pre-Moderation

In pre-moderation, all user-generated content is checked before it goes live. A moderator or an AI filtering system reviews the content first.

2. Post-Moderation

Here, content is published immediately, but is checked after it goes live. Moderators or automated systems remove anything that breaks the moderation policy.

3. Reactive Moderation

With this method, users help out by flagging and reporting bad content. Then, moderators review the flagged posts.

4. Distributed Moderation

In this type, the community itself reviews and moderates content. Members vote or give feedback to approve or reject posts.

5. Automated Moderation

This utilizes software or AI filtering to automatically detect and remove harmful content. It works 24/7 and handles large volumes of posts.

Most platforms combine several of these methods into a robust moderation workflow that keeps the community safe and ensures a positive user experience.

Content Moderation Strategies: Tools and Techniques for Online Communities

Content moderation helps keep online spaces safe, respectful, and enjoyable. It involves checking and managing user-generated content, such as comments, photos, or videos, to ensure compliance with community rules.

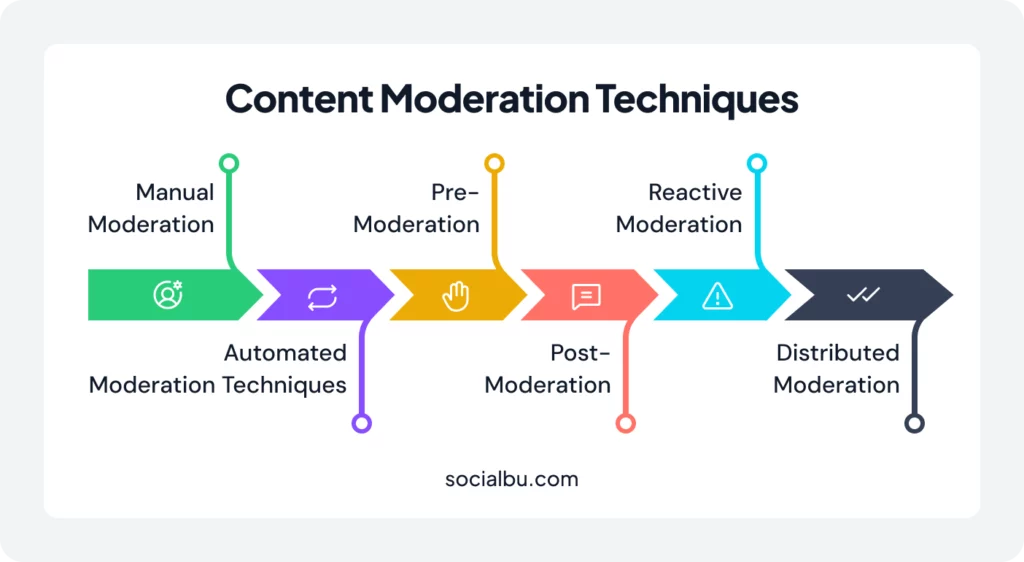

Content Moderation Techniques

Below are key content moderation techniques used by platforms:

1. Manual Moderation

This is when real people (moderators) review the content themselves.

- Strengths: Humans understand context, tone, and emotions better than machines. This helps with nuanced situations.

- Limitations: It takes time and effort, especially on large platforms. It’s hard to scale as the community grows.

Best for: Complex or sensitive situations where empathy matters.

2. Automated Moderation Techniques

This uses AI filtering, machine learning, and Natural Language Processing (NLP) to detect and filter harmful or rule-breaking content quickly.

- Can scan text, images, audio, and video in real time.

- Tools include:
  - Keyword blacklists (blocking bad words)
  - Sentiment analysis (detecting anger or hate)
  - Behavior scoring (flagging suspicious accounts)
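To make the first of these concrete, here is a minimal Python sketch of a keyword blocklist. The term list (`BLOCKLIST`) and the simple allow/block decision are hypothetical placeholders, not taken from any specific moderation tool.

```python
import re

# Hypothetical blocklist; real deployments maintain much larger, regularly
# reviewed term lists per language and community.
BLOCKLIST = {"spamlink", "badword", "scamcoin"}

# One case-insensitive pattern with word boundaries, so "badword" matches
# but a longer word that merely contains it (e.g. "badwordsmith") does not.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in sorted(BLOCKLIST)) + r")\b",
    re.IGNORECASE,
)


def find_blocked_terms(text: str) -> list[str]:
    """Return blocklisted terms found in text, lowercased, in order of appearance."""
    return [match.group(0).lower() for match in _PATTERN.finditer(text)]


def keyword_filter(text: str) -> str:
    """Return 'block' if any blocklisted term appears, otherwise 'allow'."""
    return "block" if find_blocked_terms(text) else "allow"


print(keyword_filter("Check out SCAMCOIN now!"))  # block
print(keyword_filter("Great post, thanks!"))      # allow
```

Matching on word boundaries avoids flagging innocent words that merely contain a blocked term, but a blocklist still misses misspellings, slang, and context. That gap is what sentiment analysis, behavior scoring, and human review are meant to cover.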

3. Pre-Moderation

Content is reviewed before it appears online.

- Suitable for sensitive audiences, such as platforms for children or health-related communities.

- Slower for users, but safer for the community.

- Prevents harmful content from going public.

- A good match for platforms where community safety is the top priority.

4. Post-Moderation

Content goes live first, and moderators check it after.

- Lets users post freely while the platform keeps control.

- Risk: Harmful content may be seen briefly before removal.

- Balances user freedom with safety.
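The practical difference between pre- and post-moderation is when a post becomes visible relative to the moderator’s decision. The Python sketch below illustrates that under simplified assumptions: the `Post` and `ModerationQueue` classes and the `"pre"`/`"post"` mode setting are hypothetical, and a real system would keep this state in a database rather than in memory.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: int
    text: str
    visible: bool = False


@dataclass
class ModerationQueue:
    mode: str  # hypothetical setting: "pre" or "post"
    published: list[Post] = field(default_factory=list)
    review_queue: deque[Post] = field(default_factory=deque)

    def __post_init__(self) -> None:
        if self.mode not in ("pre", "post"):
            raise ValueError('mode must be "pre" or "post"')

    def submit(self, post: Post) -> None:
        if self.mode == "post":
            # Post-moderation: the post goes live immediately...
            post.visible = True
            self.published.append(post)
        # ...but in both modes it waits in the queue for a moderator.
        self.review_queue.append(post)

    def review(self, approve: bool) -> Post:
        """Apply a moderator's decision to the oldest queued post."""
        post = self.review_queue.popleft()
        if approve and not post.visible:
            # Pre-moderation: nothing is public until a moderator approves it.
            post.visible = True
            self.published.append(post)
        elif not approve and post.visible:
            # Post-moderation: take down content that was already live.
            post.visible = False
            self.published.remove(post)
        return post


pre = ModerationQueue(mode="pre")
pre.submit(Post(1, "Hello, community!"))
print(len(pre.published))  # 0: nothing is live before review
pre.review(approve=True)
print(len(pre.published))  # 1
```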

5. Reactive Moderation

Users help by flagging and reporting inappropriate content.

- The platform only acts after a report is submitted.

- Response speed depends on how active and responsible the community is.

- Empowers users to help moderate.

- Related concepts: flagging and reporting, moderation policy.
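Reactive moderation needs a rule for when reports are enough to warrant a moderator’s attention. One common approach, sketched below in Python, is to count distinct reporters per post and flag the post once a threshold is reached. The threshold value and the `ReportTracker` class are hypothetical; counting distinct users rather than raw reports is a design choice that stops one person from flagging a post over and over.

```python
from collections import defaultdict

REPORT_THRESHOLD = 3  # hypothetical: distinct reporters needed before review


class ReportTracker:
    """Flags a post for moderator review once enough distinct users report it."""

    def __init__(self, threshold: int = REPORT_THRESHOLD) -> None:
        self.threshold = threshold
        self.reporters: defaultdict[int, set[str]] = defaultdict(set)
        self.flagged: list[int] = []

    def report(self, post_id: int, user_id: str) -> bool:
        """Record one report; return True only when it makes the post reach the threshold."""
        reporters = self.reporters[post_id]
        if user_id in reporters:
            return False  # repeat reports from the same user do not count twice
        reporters.add(user_id)
        if len(reporters) == self.threshold:
            self.flagged.append(post_id)  # hand off to the moderator review queue
            return True
        return False


tracker = ReportTracker()
for user in ["alice", "alice", "bob", "carol"]:
    tracker.report(42, user)
print(tracker.flagged)  # [42]
```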

6. Distributed Moderation

The community itself moderates content using votes, reviews, or reputation systems.

- Seen in places like Reddit (upvotes/downvotes).

- Moderation power is shared with trusted users.

- Scales well and builds community trust.

- Related concepts: community moderation guidelines, safe online communities.
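Vote-based community moderation can be sketched as a net score with a cut-off, optionally giving trusted users’ votes more weight, as the reputation systems mentioned above do. In the Python sketch below, the weighting formula, the 3x cap, and `HIDE_THRESHOLD` are hypothetical choices for illustration; they do not describe how Reddit or any other platform actually scores votes.

```python
HIDE_THRESHOLD = -3.0  # hypothetical: collapse posts at or below this score


def vote_weight(reputation: int) -> float:
    """Hypothetical weighting: 1 + reputation/100, clamped to the range 0x..3x."""
    return max(0.0, min(1.0 + reputation / 100, 3.0))


def community_score(votes: list[tuple[int, int]]) -> float:
    """Sum reputation-weighted votes.

    Each vote is (direction, voter_reputation), with direction +1 for an
    upvote and -1 for a downvote.
    """
    return sum((direction * vote_weight(rep) for direction, rep in votes), 0.0)


def is_hidden(votes: list[tuple[int, int]]) -> bool:
    """The community, not a moderator, decides when a post is collapsed."""
    return community_score(votes) <= HIDE_THRESHOLD


print(is_hidden([(-1, 0), (-1, 0), (-1, 0)]))  # True: three ordinary downvotes
print(is_hidden([(1, 200), (-1, 0), (-1, 0)]))  # False: a trusted upvote offsets them
```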

Best Practices

- Use a mix of techniques for better moderation workflows.

- Set clear moderation policies and community guidelines.

- Involve both tech (AI) and people (moderators or users).

- Always strive for safe and inclusive online communities.

Content Moderation Tools

Content moderation tools help keep online spaces safe by reviewing and managing user-generated content. These tools are essential for building safe online communities and following clear community moderation guidelines.

1. Social Media Moderation Tools

These are tools used by platforms like Facebook, Instagram, or forums to manage content. They can be built-in (in-house systems) or provided by third-party platforms.

Features include:

1. AI Filtering: Automatically detects and removes harmful or inappropriate content using automated moderation techniques. It can catch things like hate speech, nudity, or spam, often before anyone sees it.

2. Flagging and Reporting: Users can report posts that are harmful. Moderators then review these reports.

3. Moderator Dashboards: Interfaces where human moderators review reports, apply moderation best practices, and make decisions based on the moderation policy.

4. Analytics: Show which types of content are flagged most often, how frequently moderation actions are taken, and more, so teams can refine their moderation workflows.
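As a rough illustration of the analytics feature, the Python sketch below summarizes a list of flag records: which violation categories come up most often, and what share of flags ended in removal. The record format (`category` and `action` keys) is a hypothetical export format, not the schema of any particular tool.

```python
from collections import Counter


def flag_summary(flags: list[dict[str, str]], top_n: int = 3) -> dict:
    """Summarize flag records shaped like {"category": "spam", "action": "removed"}."""
    by_category = Counter(flag["category"] for flag in flags)
    removed = sum(1 for flag in flags if flag["action"] == "removed")
    return {
        "top_categories": by_category.most_common(top_n),
        # Share of flags that led to removal; a low rate may mean users
        # over-report, or that the rules they report against are unclear.
        "removal_rate": removed / len(flags) if flags else 0.0,
    }


flags = [
    {"category": "spam", "action": "removed"},
    {"category": "spam", "action": "dismissed"},
    {"category": "hate", "action": "removed"},
    {"category": "spam", "action": "removed"},
]
print(flag_summary(flags))
# {'top_categories': [('spam', 3), ('hate', 1)], 'removal_rate': 0.75}
```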

2. Integration Tools

These tools help connect moderation systems to websites, mobile apps, or content management systems (CMS).

APIs and automation tools can:

- Automatically send content for review as soon as it’s posted.

- Trigger filters or moderation workflows in real time.

- Make moderation part of your app without building everything from scratch.
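The core integration idea, filters triggered automatically the moment content is posted, can be sketched as a small in-process hook. Everything in this Python sketch (`register_filter`, `on_post_created`, and the two example filters) is hypothetical; a real integration would often call a vendor’s moderation API from a hook like this instead of, or alongside, local checks.

```python
from typing import Callable, Optional

# A filter inspects a post's text and returns a reason string if it objects, else None.
Filter = Callable[[str], Optional[str]]
_filters: list[Filter] = []


def register_filter(check: Filter) -> Filter:
    """Decorator that adds a check to the pipeline run on every new post."""
    _filters.append(check)
    return check


@register_filter
def too_many_links(text: str) -> Optional[str]:
    return "too many links" if text.count("http") > 2 else None


@register_filter
def all_caps(text: str) -> Optional[str]:
    letters = [c for c in text if c.isalpha()]
    if len(letters) >= 10 and all(c.isupper() for c in letters):
        return "all caps"
    return None


def on_post_created(text: str) -> dict:
    """Hypothetical hook the app calls the moment a post is saved."""
    reasons = []
    for check in _filters:
        reason = check(text)
        if reason is not None:
            reasons.append(reason)
    # Anything a filter objected to is held for review; everything else goes live.
    return {"status": "needs_review" if reasons else "published", "reasons": reasons}


print(on_post_created("BUY NOW CHEAP PILLS"))
# {'status': 'needs_review', 'reasons': ['all caps']}
```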

3. Reporting and Escalation Tools

Sometimes, content requires urgent attention, such as threats or harmful behavior. These tools help moderators act fast.

Key Features:

- Workflow tools: Sort content by urgency, type, or violation.

- Escalation protocols: If a serious issue is identified, the system forwards it to a higher-level moderator or specialist for review.

- Support for large moderator teams, enabling them to collaborate and adhere to moderation best practices.
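Sorting by urgency and escalating serious cases can be modeled with a priority queue. In this Python sketch, the severity ranks in `SEVERITY`, the escalation cut-off, and the `TriageQueue` class are hypothetical; each team would define its own violation types and escalation rules in its moderation policy.

```python
import heapq
import itertools
from typing import Optional

# Hypothetical severity ranks: a lower number means more urgent.
SEVERITY = {"threat": 0, "self_harm": 0, "hate": 1, "harassment": 2, "spam": 3}
UNKNOWN_SEVERITY = 4  # unrecognized violation types are handled last
ESCALATE_AT = 0       # items this severe skip the queue and go to a specialist


class TriageQueue:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        # Monotonic counter breaks ties so equal-severity items stay first-in, first-out.
        self._counter = itertools.count()
        self.escalated: list[str] = []

    def add(self, item_id: str, violation: str) -> None:
        severity = SEVERITY.get(violation, UNKNOWN_SEVERITY)
        if severity <= ESCALATE_AT:
            # Escalation protocol: forward immediately to a senior moderator or specialist.
            self.escalated.append(item_id)
        else:
            heapq.heappush(self._heap, (severity, next(self._counter), item_id))

    def next_item(self) -> Optional[str]:
        """Return the most urgent remaining item for the regular team, or None."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


queue = TriageQueue()
for item_id, violation in [("a", "spam"), ("b", "threat"), ("c", "hate"), ("d", "harassment")]:
    queue.add(item_id, violation)
print(queue.escalated)    # ['b']
print(queue.next_item())  # c
```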

Now that we’ve seen what top social media moderation tools offer, let’s move forward and talk about a tool that brings it all together, from scheduling and publishing to analytics and advanced content moderation.

Spotlight Tool: SocialBu for Content Moderation

SocialBu isn’t just a scheduling tool; it also helps teams enforce community moderation guidelines, apply automated moderation techniques, and run moderation workflows.

Here’s how:

1. Unified Social Inbox

- Collects all messages, comments, mentions, and reviews from different platforms (Facebook, Instagram, Twitter (X), LinkedIn, Google My Business) into one dashboard.

- Moderators can quickly spot concerns and apply the moderation policy.

2. AI Filtering & Automated Responses

- Uses automated moderation techniques, monitoring keywords and hashtags to detect problem terms or negative reviews.

- Offers auto-replies or pre-set moderation actions, which help maintain safe conversations with minimal delay.

3. Moderation Workflows

- Supports automation rules, such as auto-posting and auto-responding to flagged content, which are an essential part of moderation workflows.

4. Analytics & Reporting

- Tracks metrics like engagement and flagged content trends, helping teams improve moderation best practices over time.

- Works with moderator dashboards and analytics to identify the types of content that are most frequently reported or problematic.

This shows that SocialBu can be used both for automated engagement and for managing sensitive content.

TL;DR

SocialBu acts as a social media moderation tool by:

- Centralizing messages/comments

- Using AI filtering and keyword monitoring

- Automating moderation steps for flagged content

- Providing analytics to refine moderation best practices

Perfect for teams that want to build safe online communities with effective moderation workflows and clear community moderation guidelines.

Here are some other social media moderation tools you can try to make online communities safer.

Google Perspective API

This tool uses automated moderation techniques powered by machine learning to evaluate the “toxicity” of comments. It’s especially useful for moderating discussions and improving brand safety by preventing hate speech and abuse.
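For a sense of what calling Perspective looks like, the Python sketch below uses only the standard library. The endpoint, the `requestedAttributes` field, and the `attributeScores.TOXICITY.summaryScore.value` response path follow Perspective’s published `comments:analyze` method as we understand it, so check the current API documentation before relying on them; `api_key` is a placeholder for your own key.

```python
import json
import urllib.request

# Perspective's comments:analyze endpoint (verify against the current docs).
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze?key={key}"


def build_request(text: str) -> dict:
    """Request body asking for a TOXICITY score for a single English comment."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }


def toxicity_from_response(response: dict) -> float:
    """Pull the summary TOXICITY probability (0.0 to 1.0) out of a parsed response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def score_comment(text: str, api_key: str) -> float:
    """Send one comment to the API; requires network access and a valid API key."""
    request = urllib.request.Request(
        ANALYZE_URL.format(key=api_key),
        data=json.dumps(build_request(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return toxicity_from_response(json.load(response))


# Example (needs a real key): score = score_comment("You are an idiot", api_key="YOUR_API_KEY")
```

The returned value estimates how likely a reader is to perceive the comment as toxic. Which score should trigger hiding or human review is a policy decision for each community, not something the API decides.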

Hive Moderation

Hive provides robust AI filtering for text, images, audio, and video. It uses deep learning models trained on large datasets and is widely employed by large-scale platforms for real-time content moderation and the detection of policy violations.

WebPurify

WebPurify offers both AI-powered and human moderation services, including image, video, and profanity filtering. It supports real-time API integration, helping brands maintain legal compliance and community trust.

By using a combination of these content moderation tools, you can apply smart, scalable moderation best practices, ensure brand safety, and support thriving user-generated content communities.

Do’s and Don’ts For Content Moderation

To keep online communities safe and improve your moderation workflows, follow these best practices:

| Do’s | Don’ts |
| --- | --- |
| Set clear community moderation guidelines | Rely only on automation |
| Use a mix of moderation techniques | Ignore feedback from users |
| Monitor analytics regularly | Apply rules inconsistently |
| Train your moderation team | Delay moderation actions |
| Protect your moderators | Forget global and cultural context |
| Stay legally compliant | Over-censor |

Future Trends in Content Moderation

- Smarter AI Filtering

- Emotion and Sentiment Detection

- Community-Led Moderation

- Predictive Moderation

- Mental Health Tools for Moderators

- Real-Time Moderation Integration

- Global Moderation Policies

Conclusion

Content moderation is essential for creating safe online communities. With the rise of user-generated content, platforms require a strategic blend of social media moderation tools, automated moderation techniques, and robust community moderation guidelines.

By using tools such as AI filtering and flagging-and-reporting features, and by establishing clear moderation workflows, platforms can maintain respectful, enjoyable, and legally safe spaces. Whether moderation is done by people, AI, or the community itself, following moderation best practices ensures a better online experience for everyone.

The future of content moderation is smarter, faster, and more community-driven. Stay updated and stay safe.

If you’re looking for an all-in-one solution to manage comments, automate moderation, and protect your brand online, try SocialBu to streamline your content moderation and build a safer, more engaging online community today.

FAQs

Q: What are the best tools for content moderation?

Tools like SocialBu, Microsoft Content Moderator, and Google Perspective API are popular options. Between them, they offer AI filtering, dashboards, and automation features that make user-generated content easier to manage.

Q: How do you set moderation policies?

Write community moderation guidelines that explain exactly what is and isn’t allowed. Keep them easy to understand, and apply them consistently across all content.

Q: Can AI automate moderation effectively?

Yes, AI filtering can efficiently handle large volumes of content. It works best when combined with human moderators for complex or sensitive posts.
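A common way to combine AI and human moderators is confidence-based routing: act automatically only when the model is very sure, and send the uncertain middle band to people. The Python sketch below assumes a classifier that returns a harm score between 0 and 1; the two thresholds are hypothetical and would need tuning against real moderation data.

```python
# Hypothetical thresholds; sensible values depend on the classifier and on
# how costly each kind of mistake is for a given community.
AUTO_REMOVE_AT = 0.95
HUMAN_REVIEW_AT = 0.60


def route(harm_score: float) -> str:
    """Decide what happens to a post given a model's harm score in [0, 1]."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0.0 and 1.0")
    if harm_score >= AUTO_REMOVE_AT:
        return "auto_remove"   # model is highly confident: act immediately
    if harm_score >= HUMAN_REVIEW_AT:
        return "human_review"  # uncertain middle band: a moderator decides
    return "allow"             # likely fine: publish (users can still report it)


for score in (0.98, 0.72, 0.10):
    print(score, route(score))
# 0.98 auto_remove
# 0.72 human_review
# 0.1 allow
```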

Q: How do you balance moderation and free speech?

Use moderation best practices: be transparent, avoid over-censorship, and focus on safety. Let users express themselves while stopping harmful content with fair moderation policies.